Causal inference in Python is a powerful approach to understand cause-effect relationships, enabling data-driven decisions. Tools like DoWhy and CausalML simplify analysis, while resources like eBooks and self-study guides provide comprehensive learning pathways.

What is Causal Inference?

Causal inference is a statistical and scientific approach to determine cause-effect relationships between variables. It goes beyond mere correlation by identifying how interventions or changes in one variable directly affect others. Unlike traditional statistical methods that focus on associations, causal inference aims to uncover underlying mechanisms, enabling predictions under hypothetical scenarios. Key challenges include confounding variables, selection bias, and ensuring valid assumptions for causal claims. Techniques like randomized experiments, instrumental variables, and propensity score matching are commonly used to establish causality. In Python, libraries such as DoWhy and CausalML provide robust frameworks for implementing these methods, making causal inference accessible for data scientists. Accurate causal reasoning is essential for informed decision-making across industries.

Importance of Causal Inference in Data Science

Causal inference is crucial in data science as it enables researchers to move beyond correlations and uncover true cause-effect relationships. This is essential for making informed decisions, predicting outcomes, and evaluating interventions. Unlike descriptive analytics, causal inference provides actionable insights by identifying how changes in one variable directly impact others. It is particularly valuable in scenarios where randomized experiments are not feasible. In fields like healthcare, policy-making, and business strategy, causal inference helps quantify the impact of decisions, ensuring resources are allocated effectively. By addressing confounding variables and selection bias, it strengthens the validity of conclusions. Python libraries like DoWhy and CausalML simplify causal analysis, empowering data scientists to tackle complex problems with precision and confidence.

Key Concepts: Potential Outcomes and Confounding Variables

Potential outcomes are a fundamental concept in causal inference: for each unit, they are the outcomes that would be observed under each possible treatment. The causal effect for a unit is the difference between its potential outcomes, but only one of them is ever observed, so effects are usually estimated as averages by comparing treatment and control groups. Confounding variables make that comparison unfair. They influence both the treatment and the outcome, so a naive comparison mixes their effect with the treatment's and yields biased estimates. Addressing confounding requires methods like matching, stratification, or regression adjustment so that like is compared with like. In Python, libraries such as DoWhy and CausalML provide tools to adjust for measured confounders, enabling more accurate causal analysis. Understanding these concepts is essential for valid causal inference in data science applications.
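A small simulation can make the two ideas concrete. The sketch below assumes NumPy is available and uses an invented data-generating process (a binary confounder x, a constant treatment effect of 2); none of the numbers come from a real study. Because the data are simulated, both potential outcomes are known, which is never the case with real data.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 100_000

# Binary confounder x raises both the chance of treatment and the outcome.
x = rng.binomial(1, 0.5, size=n)
t = rng.binomial(1, np.where(x == 1, 0.8, 0.2))

# Potential outcomes: y0 without treatment, y1 with treatment.
# The unit-level effect y1 - y0 is 2 for every unit (an assumption of this simulation).
y0 = 3.0 * x + rng.normal(size=n)
y1 = y0 + 2.0

# Only one potential outcome per unit is observed.
y = np.where(t == 1, y1, y0)

true_ate = np.mean(y1 - y0)

# Naive comparison: mixes the treatment effect with the effect of x.
naive = y[t == 1].mean() - y[t == 0].mean()

# Stratification: compare within each level of x, then weight by how common that level is.
adjusted = sum(
    (y[(t == 1) & (x == v)].mean() - y[(t == 0) & (x == v)].mean()) * np.mean(x == v)
    for v in (0, 1)
)

print(f"true ATE={true_ate:.2f}  naive={naive:.2f}  stratified={adjusted:.2f}")
```

The naive difference overstates the effect because treated units have x = 1 more often, while the stratified estimate lands close to the true average effect.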

Core Concepts and Models

Causal models, including Directed Acyclic Graphs (DAGs), formalize cause-effect relationships. Interventions and counterfactuals are central to understanding causality. Python libraries like DoWhy and CausalML enable practical implementation.

Pearlian Causal Concepts and DAGs

Pearlian causal concepts, developed by Judea Pearl, provide a foundational framework for understanding causality using Directed Acyclic Graphs (DAGs). These graphs visually represent causal relationships, with nodes as variables and edges as causal links. Pearl’s do-calculus enables reasoning about interventions and counterfactuals, essential for causal inference. In Python, libraries like DoWhy and CausalGraph implement these concepts, allowing users to construct and analyze DAGs. These tools help identify confounding variables, test causal assumptions, and estimate effects. Pearlian methods are particularly valuable for structural models, enabling researchers to move beyond correlation to true causation. By integrating these concepts into Python workflows, data scientists can leverage robust causal reasoning in their analyses.
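To illustrate how a DAG can be represented and queried, here is a minimal sketch that uses only the Python standard library (graphlib requires Python 3.9 or later). The variables and edges are hypothetical, and treating common ancestors as candidate confounders is a simplifying heuristic, not a full implementation of Pearl's backdoor criterion.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical DAG: each key maps to the list of its direct causes (parents).
parents = {
    "age": [],
    "exercise": ["age"],
    "diet": ["age"],
    "blood_pressure": ["exercise", "diet", "age"],
}

def is_acyclic(parent_map):
    """Return True if the parent map has no directed cycles."""
    try:
        tuple(TopologicalSorter(parent_map).static_order())
    except CycleError:
        return False
    return True

def ancestors(node, parent_map):
    """Return all direct and indirect causes of `node`."""
    found = set()
    stack = list(parent_map[node])
    while stack:
        p = stack.pop()
        if p not in found:
            found.add(p)
            stack.extend(parent_map[p])
    return found

def common_causes(treatment, outcome, parent_map):
    """Heuristic: variables that are ancestors of both treatment and outcome."""
    return ancestors(treatment, parent_map) & ancestors(outcome, parent_map)

print(is_acyclic(parents))
print(common_causes("exercise", "blood_pressure", parents))
```

In this toy graph, age is the only common cause of exercise and blood_pressure, so it is the variable to adjust for when estimating the effect of exercise.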

Bayesian and Causal Approaches to Inference

Bayesian methods offer a probabilistic approach to causal inference, allowing researchers to incorporate prior knowledge and quantify uncertainty. Using Bayes’ theorem, they update prior beliefs with observed data to produce a posterior distribution over causal effects rather than a single point estimate. In Python, libraries like PyMC (formerly PyMC3) and ArviZ support Bayesian modeling and diagnostics, which can be applied to causal problems. Bayesian networks represent dependencies through directed acyclic graphs (DAGs) and align with Pearlian concepts when their edges are given a causal interpretation. These models are particularly useful for handling missing data and complex distributions. Bayesian estimates can also be used alongside tools like CausalML and DoWhy. By combining Bayesian and causal methods, data scientists can better address uncertainty and incorporate prior information in their causal estimates.
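As a minimal sketch of the Bayesian updating described above, the example below estimates the effect of a treatment on a conversion rate in a randomized experiment. It uses only the standard library; the counts and the uniform Beta(1, 1) prior are made up for illustration. Because assignment is assumed to be random, the difference in rates can be read causally.

```python
import random

random.seed(0)

# Hypothetical randomized experiment: conversions out of users in each arm.
control = {"successes": 120, "trials": 1000}
treated = {"successes": 150, "trials": 1000}

def posterior_draws(successes, trials, n_draws=20_000, prior_a=1.0, prior_b=1.0):
    """Sample a conversion rate from its Beta posterior under a Beta(prior_a, prior_b) prior."""
    a = prior_a + successes
    b = prior_b + trials - successes
    return [random.betavariate(a, b) for _ in range(n_draws)]

p_control = posterior_draws(**control)
p_treated = posterior_draws(**treated)

# Posterior distribution of the effect on the conversion rate.
effect = sorted(pt - pc for pt, pc in zip(p_treated, p_control))
mean_effect = sum(effect) / len(effect)
ci_low = effect[int(0.025 * len(effect))]
ci_high = effect[int(0.975 * len(effect))]
prob_positive = sum(e > 0 for e in effect) / len(effect)

print(f"mean effect={mean_effect:.3f}, 95% interval=({ci_low:.3f}, {ci_high:.3f})")
print(f"P(effect > 0)={prob_positive:.3f}")
```

The output is a full posterior for the effect, so statements such as "the probability that the treatment helps" can be read off directly. A library like PyMC becomes useful once the model is more complex than this conjugate case.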

Structural Causal Models (SCMs)

Structural Causal Models (SCMs) provide a framework to represent causal relationships using directed acyclic graphs (DAGs) and structural equations. These models define how variables are generated through causal mechanisms, enabling interventions and counterfactual reasoning. SCMs are foundational in causal inference, as they formalize cause-effect relationships and allow for the identification of confounders and mediators. In Python, libraries like DoWhy and CausalGraph support the implementation of SCMs, enabling researchers to estimate causal effects and test interventions. By explicitly modeling the data-generating process, SCMs offer a transparent and interpretable approach to uncovering causal structures. This makes them invaluable for addressing complex causal questions in various domains, from healthcare to social sciences.
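The following sketch shows what "structural equations" and "interventions" mean in code. It assumes NumPy and an invented linear SCM with a confounder Z, a treatment T, a mediator M, and an outcome Y; the coefficients are arbitrary. Passing do_t replaces T's own equation with a constant, which is the do-operator.

```python
import numpy as np

def sample_scm(n, do_t=None, seed=0):
    """Sample from a hypothetical linear SCM; `do_t` overrides the equation for T."""
    rng = np.random.default_rng(seed)
    u_z, u_t, u_m, u_y = rng.normal(size=(4, n))  # exogenous noise terms

    z = u_z                                  # Z := U_z           (confounder)
    if do_t is None:
        t = (z + u_t > 0).astype(float)      # T := 1[Z + U_t > 0]
    else:
        t = np.full(n, float(do_t))          # do(T = t): the Z -> T edge is cut
    m = 1.5 * t + u_m                        # M := 1.5 T + U_m   (mediator)
    y = 2.0 * m + 3.0 * z + u_y              # Y := 2 M + 3 Z + U_y
    return {"z": z, "t": t, "m": m, "y": y}

n = 50_000

# Observational regime: T depends on Z, so the group difference is confounded.
obs = sample_scm(n)
observational_gap = obs["y"][obs["t"] == 1].mean() - obs["y"][obs["t"] == 0].mean()

# Interventional regime: the same seed reuses the same noise, so this is a
# unit-by-unit counterfactual contrast between do(T=1) and do(T=0).
y_do1 = sample_scm(n, do_t=1)["y"]
y_do0 = sample_scm(n, do_t=0)["y"]
interventional_effect = (y_do1 - y_do0).mean()

print(f"observational gap={observational_gap:.2f}")
print(f"effect under intervention={interventional_effect:.2f}")
```

The interventional contrast equals 1.5 × 2 = 3, the effect transmitted through the mediator, whereas the observational gap is much larger because Z drives both T and Y.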

Causal Inference Methodologies

Causal inference methodologies include randomized controlled trials (RCTs), observational studies, and causal graph analysis. These methods help establish cause-effect relationships, enabling informed decision-making in data science applications.

Randomized Controlled Trials (RCTs)

Randomized Controlled Trials (RCTs) are the gold standard for establishing causality. Because subjects are assigned to treatment or control at random, the groups are comparable on average in both measured and unmeasured characteristics, so a difference in outcomes can be attributed to the treatment. In Python, the standard-library random module can handle group assignment and pandas the data manipulation. Key steps include defining the treatment and control conditions, randomizing assignment, and analyzing outcomes with statistical methods. Python’s statsmodels and scipy libraries provide hypothesis tests and regression tools for estimating treatment effects and their uncertainty. RCTs are widely used in healthcare, social sciences, and tech for evaluating interventions, making them a cornerstone of causal inference methodologies in Python-based data science workflows.
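The steps of an RCT analysis can be sketched with the standard library alone. The subjects, the baseline score, and the true effect of 5 are all simulated assumptions; with real data only the assignment and analysis parts would apply.

```python
import random
import statistics

random.seed(42)

# Hypothetical subjects with a baseline score that also drives the outcome.
subjects = [{"id": i, "baseline": random.gauss(50, 10)} for i in range(2000)]

# Randomize: shuffle the ids and assign the first half to treatment.
order = list(range(len(subjects)))
random.shuffle(order)
treated_ids = set(order[: len(order) // 2])

TRUE_EFFECT = 5.0  # assumed for the simulation only
for s in subjects:
    s["treated"] = s["id"] in treated_ids
    s["outcome"] = s["baseline"] + TRUE_EFFECT * s["treated"] + random.gauss(0, 5)

treat = [s["outcome"] for s in subjects if s["treated"]]
ctrl = [s["outcome"] for s in subjects if not s["treated"]]

# Difference in means with a normal-approximation 95% confidence interval.
ate = statistics.mean(treat) - statistics.mean(ctrl)
se = (statistics.variance(treat) / len(treat) + statistics.variance(ctrl) / len(ctrl)) ** 0.5
ci = (ate - 1.96 * se, ate + 1.96 * se)

# Balance check: randomization should leave baselines similar across arms.
baseline_gap = statistics.mean(
    s["baseline"] for s in subjects if s["treated"]
) - statistics.mean(s["baseline"] for s in subjects if not s["treated"])

print(f"estimated effect={ate:.2f}, 95% CI=({ci[0]:.2f}, {ci[1]:.2f})")
print(f"baseline gap between arms={baseline_gap:.2f}")
```

No adjustment for the baseline is needed for an unbiased estimate, although adjusting for it in a regression would typically narrow the confidence interval.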

Instrumental Variables and Propensity Score Matching

Instrumental Variables (IV) and Propensity Score Matching (PSM) are widely used methods for causal inference when randomization is not feasible. IV relies on an instrument: a variable that is correlated with the treatment, affects the outcome only through the treatment, and is unrelated to the unobserved confounders of treatment and outcome. PSM matches treated and control units with similar propensity scores (the estimated probability of receiving treatment given observed covariates), which balances those covariates but not unobserved ones. In Python, libraries like statsmodels support IV analysis, while pandas and scikit-learn facilitate PSM implementation. These methods are particularly useful for observational data, helping to mitigate confounding and selection bias. Because they rest on different assumptions, researchers can apply both and compare the results to strengthen causal claims in scenarios where RCTs are impractical.
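To show why an instrument helps, the sketch below simulates data with an unobserved confounder and compares ordinary least squares with a hand-rolled two-stage least squares estimate. It assumes NumPy; the variables, coefficients, and the "random encouragement" instrument are invented for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 100_000

u = rng.normal(size=n)                       # unobserved confounder
z = rng.binomial(1, 0.5, size=n)             # instrument, e.g. a random encouragement
t = 0.8 * z + u + rng.normal(size=n)         # treatment depends on Z and U
y = 2.0 * t + 3.0 * u + rng.normal(size=n)   # true effect of T on Y is 2

def slope(a, b):
    """OLS slope from regressing b on a (with an intercept)."""
    return np.cov(a, b)[0, 1] / np.var(a, ddof=1)

# OLS is biased because U affects both T and Y.
ols = slope(t, y)

# Two-stage least squares with one instrument:
# stage 1 predicts T from Z, stage 2 regresses Y on the prediction.
first_stage = slope(z, t)
t_hat = t.mean() + first_stage * (z - z.mean())
iv = slope(t_hat, y)

# With a single instrument this equals the Wald ratio.
wald = slope(z, y) / slope(z, t)

print(f"OLS={ols:.2f}  2SLS={iv:.2f}  Wald={wald:.2f}")
```

The manual second stage gives the right point estimate but not valid standard errors, so a dedicated IV routine is preferable for inference. The estimate is also only as good as the assumption that Z affects Y solely through T, which the data cannot confirm.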

Causal Structure Learning and Discovery

Causal structure learning, also called causal discovery, involves identifying causal relationships between variables from data. Constraint-based methods use statistical tests of conditional independence to decide which edges to remove from a graph and, where possible, how to orient the rest. Bayesian network approaches instead model the joint distribution as a probabilistic graph and search for structures that fit the data well. Observational data alone usually identifies the graph only up to a set of equivalent structures, so domain knowledge is still needed to settle some edge directions. Python libraries such as causalgraph and DoWhy provide tools for this, enabling users to estimate causal structures. Accurate causal discovery aids in distinguishing confounders, mediators, and spurious associations, which is crucial for unbiased causal effect estimation, and it is a key step in many causal inference workflows.
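The core operation of a constraint-based method is a conditional independence test. The sketch below assumes NumPy and simulated data from a hypothetical chain X -> Y -> Z, and uses partial correlation with a Fisher z statistic, which is appropriate for roughly linear-Gaussian data. It illustrates one test, not a complete discovery algorithm.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 20_000

# Hypothetical chain X -> Y -> Z.
x = rng.normal(size=n)
y = 0.8 * x + rng.normal(size=n)
z = 0.8 * y + rng.normal(size=n)

def residual(a, b):
    """Part of `a` that is not linearly explained by `b`."""
    b_c = b - b.mean()
    beta = np.dot(a - a.mean(), b_c) / np.dot(b_c, b_c)
    return a - a.mean() - beta * b_c

def partial_corr(a, b, given):
    """Correlation of a and b after removing the linear effect of `given`."""
    return np.corrcoef(residual(a, given), residual(b, given))[0, 1]

def fisher_z(r, n_samples, n_conditioned):
    """Approximately standard normal under the null of zero (partial) correlation."""
    return np.sqrt(n_samples - n_conditioned - 3) * np.arctanh(r)

marginal = np.corrcoef(x, z)[0, 1]
conditional = partial_corr(x, z, y)

print(f"corr(X, Z)={marginal:.3f}, z-stat={fisher_z(marginal, n, 0):.1f}")
print(f"corr(X, Z | Y)={conditional:.3f}, z-stat={fisher_z(conditional, n, 1):.1f}")
```

X and Z are clearly dependent, but the dependence disappears once Y is held fixed, so a constraint-based algorithm would drop the direct X-Z edge. The same pattern would arise from a fork X <- Y -> Z, which is why such tests alone cannot orient every edge.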

Tools and Libraries for Causal Inference in Python

Popular Python libraries for causal inference include DoWhy, CausalML, and CausalGraph. These tools simplify causal analysis, offering methods for causal graph estimation and treatment effect calculation.

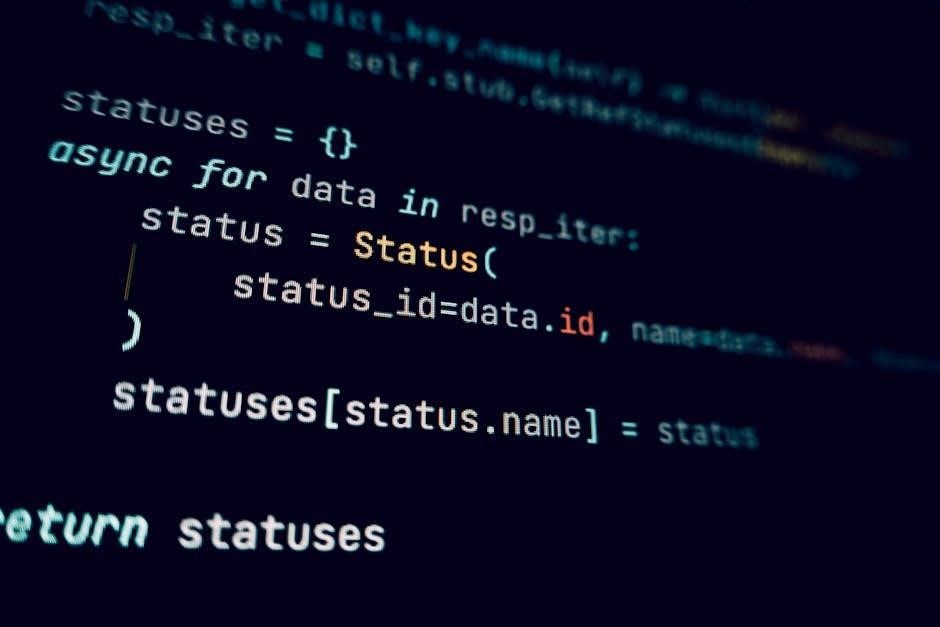

DoWhy and CausalML are two widely used Python libraries for causal inference. DoWhy provides a unified framework that organizes an analysis into four steps: model the causal assumptions as a graph, identify the target estimand, estimate the effect, and refute the estimate with robustness checks. It supports several estimation methods, including instrumental variables. CausalML, on the other hand, focuses on treatment effect estimation, including effects that vary across individuals, and uses machine learning models to adjust for confounders. It works with popular libraries like scikit-learn and pandas, making it accessible for practitioners. Both libraries streamline causal inference workflows, helping data scientists draw actionable insights from observational data, and both are used in industry and research.
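To give a feel for the kind of treatment effect estimation CausalML targets, here is a sketch of a T-learner, one of the meta-learner approaches the library is known for. The code deliberately uses plain NumPy least squares instead of CausalML's own API, and the data are simulated with an effect that grows with a covariate x; all names and coefficients are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 20_000

x = rng.uniform(-1, 1, size=n)
t = rng.binomial(1, 0.5, size=n)       # randomized here to keep the example simple
tau = 1.0 + 2.0 * x                    # true effect varies with x
y = 0.5 * x + tau * t + rng.normal(scale=0.5, size=n)

def fit_linear(features, target):
    """Least-squares fit of target on [1, features]; returns (intercept, slope)."""
    design = np.column_stack([np.ones_like(features), features])
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    return coef

def predict(coef, features):
    return coef[0] + coef[1] * features

# T-learner: fit one outcome model per arm, then take the difference of predictions.
coef_treated = fit_linear(x[t == 1], y[t == 1])
coef_control = fit_linear(x[t == 0], y[t == 0])

grid = np.array([-0.5, 0.0, 0.5])
cate = predict(coef_treated, grid) - predict(coef_control, grid)

for g, c in zip(grid, cate):
    print(f"x={g:+.1f}: estimated effect={c:.2f}, true effect={1.0 + 2.0 * g:.2f}")
```

In practice the two linear fits would be replaced by flexible learners such as gradient-boosted trees, and with observational data the covariates would need to include the confounders.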

Using CausalGraph for Causal Discovery

CausalGraph is a Python library tailored for causal discovery, enabling users to uncover causal relationships within datasets. It provides robust algorithms for constructing and analyzing causal graphs, essential for understanding complex causal structures. Key features include automated causal structure learning, implementation of prominent algorithms like PC and GES, and visualization tools for Directed Acyclic Graphs (DAGs). Its user-friendly design and seamless integration with popular data science libraries such as pandas and scikit-learn make it a valuable tool for data scientists. CausalGraph simplifies the process of translating data into actionable insights, aiding in real-world applications where understanding causality is crucial. Its effectiveness in practical scenarios makes it a standout choice for those exploring causal inference in Python.

ThinkCausal: A Tool for Causal Inference and Learning

ThinkCausal is an innovative Python library designed to streamline causal inference workflows. It offers an intuitive interface for performing causal analysis, making it accessible to both beginners and advanced practitioners. The library provides automated tools for causal effect estimation, counterfactual reasoning, and policy evaluation. ThinkCausal also supports interactive learning through visualizations and simulations, helping users understand causal relationships more effectively. Its modular design allows integration with other libraries like pandas and scikit-learn, enhancing its versatility. By focusing on simplicity and clarity, ThinkCausal democratizes access to causal inference, enabling data scientists to apply these methods in real-world scenarios without extensive expertise. This makes it a valuable resource for both research and educational purposes in Python-based data science workflows.

Applications of Causal Inference

Causal inference enables understanding cause-effect relationships, informing decision-making across industries. It enhances policy evaluation, algorithmic fairness, and personalized interventions, providing actionable insights in data-driven environments.

Causal Inference in the Tech Industry

Causal inference is widely applied in the tech industry to guide decision-making and optimize outcomes. Companies use it to analyze the impact of product features, algorithmic changes, and user interventions. For instance, tech firms run A/B tests to measure the effect of a change on user engagement or revenue, and turn to observational methods when an experiment is not possible. Tools like DoWhy and CausalML support this kind of analysis, helping businesses separate cause-effect relationships from coincidental patterns. Additionally, causal inference aids in personalization, fraud detection, and algorithmic fairness. With techniques such as propensity score matching, tech companies can estimate effects from logged data and make data-driven decisions. This approach helps ground product decisions in causal evidence rather than mere correlations.

Healthcare and Medical Research Applications

Causal inference plays a vital role in healthcare and medical research, enabling researchers to determine the causal effects of treatments, drugs, and policies. It helps in identifying whether a specific intervention leads to improved patient outcomes, even in the absence of randomized controlled trials. For instance, causal methods are used to analyze the effectiveness of new medications or surgical procedures. In personalized medicine, causal inference aids in tailoring treatments to individual patient characteristics. Additionally, it is instrumental in evaluating public health policies, such as vaccination programs or disease prevention strategies. By applying Python libraries like DoWhy and CausalML, researchers can uncover causal relationships, driving evidence-based decision-making in healthcare.

Social Sciences and Policy Evaluation

Causal inference is transformative in social sciences and policy evaluation, enabling researchers to assess the impact of interventions and policies on societal outcomes. It helps determine whether programs, such as educational initiatives or poverty reduction strategies, achieve their intended effects. By addressing confounding variables, causal methods provide insights into the root causes of social phenomena, such as inequality or crime rates. In policy evaluation, techniques like instrumental variables and propensity score matching are used to estimate causal effects when randomized experiments are not feasible. Python libraries like DoWhy and CausalML facilitate these analyses, empowering researchers to inform evidence-based policy decisions and drive societal progress through data-driven approaches.
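As an illustration of propensity score matching in a policy setting, the sketch below evaluates a hypothetical program in which enrollment depends on an observed covariate x that also affects the outcome. It assumes NumPy, fits the propensity model with a small hand-written Newton solver, and uses simulated data with a true effect of 1; it ignores refinements such as calipers and standard errors.

```python
import numpy as np

rng = np.random.default_rng(4)
n = 3_000

# Hypothetical program: covariate x drives both enrollment and the outcome.
x = rng.normal(size=n)
t = rng.binomial(1, 1 / (1 + np.exp(-(-0.5 + x))))
y = 1.0 * t + 2.0 * x + rng.normal(size=n)   # true effect of the program is 1

def fit_propensity(features, treatment, steps=25):
    """Logistic regression of treatment on [1, features] via Newton's method."""
    design = np.column_stack([np.ones_like(features), features])
    beta = np.zeros(design.shape[1])
    for _ in range(steps):
        p = 1 / (1 + np.exp(-design @ beta))
        grad = design.T @ (treatment - p)
        hess = (design * (p * (1 - p))[:, None]).T @ design
        beta += np.linalg.solve(hess, grad)
    return 1 / (1 + np.exp(-design @ beta))

ps = fit_propensity(x, t)

# 1-nearest-neighbour matching on the propensity score, with replacement.
treated_idx = np.flatnonzero(t == 1)
control_idx = np.flatnonzero(t == 0)
dist = np.abs(ps[treated_idx][:, None] - ps[control_idx][None, :])
matches = control_idx[dist.argmin(axis=1)]

# Average treatment effect on the treated (ATT) from matched pairs.
att = np.mean(y[treated_idx] - y[matches])
naive = y[t == 1].mean() - y[t == 0].mean()

print(f"naive difference={naive:.2f}, matched ATT={att:.2f}")
```

Matching removes most of the bias in the naive comparison here because the only confounder is observed. If enrollment also depended on something unmeasured, the matched estimate would remain biased.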

Learning Resources and Guides

Explore comprehensive resources like eBooks, tutorials, and self-study guides to master causal inference in Python. Utilize libraries like DoWhy and CausalML for hands-on practice and real-world applications.

Causal Inference in Python: A Self-Study Guide

A self-study guide for causal inference in Python offers a structured approach to mastering causal reasoning and analysis. Start with foundational concepts, such as potential outcomes and confounding variables, using resources like the DoWhy handbook. Install essential libraries such as DoWhy, CausalML, and CausalGraph to practice hands-on. Work through tutorials on propensity score matching and instrumental variables. Explore real-world datasets to apply techniques like causal structure discovery. Reference PDF guides and documentation for step-by-step implementation details. Engage with communities like GitHub repositories and forums for troubleshooting. This guide provides a clear pathway to proficiency in Python-based causal inference, ensuring practical and theoretical understanding.

Recommended Books and eBooks on Causal Inference

For in-depth learning, several books and eBooks on causal inference are highly recommended. “Causal Inference: The Mixtape” by Scott Cunningham provides an accessible introduction to core concepts. “Causal Inference in Statistics: A Primer” by Pearl, Glymour, and Jewell offers a concise introduction to the graphical approach. “Causality: Models, Reasoning, and Inference” by Pearl is a seminal work for advanced understanding. For general Python data handling, “Python for Data Analysis” by Wes McKinney complements causal inference practices. Additionally, eBooks like “Causal Inference in Python” offer practical guides tailored to Python implementations. These resources provide theoretical foundations and practical examples, making them invaluable for mastering causal inference in Python.

Online Courses and Tutorials for Causal Inference

Several online courses and tutorials provide hands-on training in causal inference. Coursera offers “Causal Data Science” by the University of Cambridge, covering foundational concepts. edX features “Causal Inference” by Columbia University, focusing on statistical methods. For Python-specific learning, DataCamp provides interactive tutorials on causal inference with Python libraries. Additionally, DoWhy offers official tutorials for its framework, and Udemy courses like “Causal Inference in Python” cover practical implementations. These resources combine theoretical insights with coding exercises, making them ideal for learners aiming to apply causal inference in real-world scenarios using Python.

Challenges and Limitations

Causal inference faces challenges like confounding variables, assumption violations, and sensitivity to model misspecification, requiring careful data collection and robust methods to ensure valid conclusions.

Common Pitfalls in Causal Inference

Causal inference often encounters pitfalls such as ignoring confounding variables, which can lead to biased results. Another common issue is assuming random assignment in non-experimental data, causing invalid conclusions. Additionally, selection bias and reverse causality frequently distort causal relationships. Overreliance on statistical models without domain knowledge can result in incorrect interpretations. Lastly, failing to account for temporal precedence or mediation effects further complicates causal analysis. Addressing these challenges requires careful data collection, robust methodologies, and sensitivity analyses to ensure valid and reliable causal insights.

Interpretation and Sensitivity Analysis

Interpreting causal effects requires careful consideration of the underlying assumptions and data. Sensitivity analysis plays a crucial role in assessing how robust causal estimates are to potential violations of these assumptions, such as unobserved confounding or measurement error. Techniques like worst-case bounds or simulation-based approaches help quantify the impact of unmeasured factors. In Python, libraries like DoWhy and CausalML provide tools for sensitivity analysis, enabling researchers to test the reliability of their findings under varying scenarios. Regular sensitivity checks ensure that causal inferences are not overly sensitive to specific assumptions or data peculiarities, fostering trust in the conclusions drawn from the analysis.
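Two simple checks illustrate the idea. The sketch below assumes NumPy and simulated data. The first check is a placebo test, similar in spirit to the refutation checks in DoWhy: shuffling the treatment should drive the estimate to zero. The second uses the standard omitted-variable bias formula for linear regression to show how the estimate would shift under hypothetical strengths of an unobserved confounder; the grid values are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(5)
n = 5_000

x = rng.normal(size=n)                             # observed confounder
t = (x + rng.normal(size=n) > 0).astype(float)     # treatment
y = 1.5 * t + 2.0 * x + rng.normal(size=n)         # true effect is 1.5

def adjusted_effect(treatment, covariate, outcome):
    """Coefficient on treatment from OLS of outcome on [1, treatment, covariate]."""
    design = np.column_stack([np.ones_like(treatment), treatment, covariate])
    coef, *_ = np.linalg.lstsq(design, outcome, rcond=None)
    return coef[1]

estimate = adjusted_effect(t, x, y)

# Placebo check: a randomly permuted treatment should show no effect.
placebo = np.array([adjusted_effect(rng.permutation(t), x, y) for _ in range(200)])

# Omitted-variable bias: if an unobserved U has effect gamma on Y and a
# coefficient delta on T (regressing U on T and X), the bias is gamma * delta.
gammas = [0.0, 0.5, 1.0]
deltas = [0.0, 0.25, 0.5]
bias_adjusted = {(g, d): estimate - g * d for g in gammas for d in deltas}

print(f"estimate={estimate:.2f}, placebo mean={placebo.mean():.3f}")
for (g, d), value in bias_adjusted.items():
    print(f"gamma={g:.2f}, delta={d:.2f} -> adjusted estimate={value:.2f}")
```

If the conclusion survives every plausible cell of the grid, it is reasonably robust. If a modest confounder would erase the effect, the finding deserves more caution.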

Information Overload and Search Strategies

With the rapid growth of resources on causal inference, navigating the wealth of information can be daunting. Practitioners often face challenges in identifying relevant materials, especially when focusing on Python-specific implementations. To combat information overload, adopt targeted search strategies, such as filtering by publication date or relevance to Python. Prioritize resources like the “Causal Inference in Python” guide, which offers practical implementations. Use specific keywords and rely on community recommendations to streamline your learning process. Additionally, focus on foundational concepts before exploring advanced methodologies, ensuring a structured approach to mastering causal inference in Python.

Future Directions and Trends

Advances in AI and machine learning are reshaping causal inference, enabling more robust analysis. Python’s expanding toolkit supports cutting-edge applications, fostering innovation in data science and research.

Emerging Trends in Causal Inference

Recent advancements in causal inference emphasize Bayesian methods, deep learning integration, and automated causal discovery. Python libraries like DoWhy and CausalML now incorporate these trends, enabling scalable causal analysis. Transfer learning and multi-modal data integration are gaining traction, allowing researchers to apply causal models across diverse domains. Additionally, there is a growing focus on interpretable causal models, ensuring transparency in complex systems. The rise of causal structure learning and dynamic causal graphs further enhances the ability to model real-world complexities. These trends are supported by Python’s robust ecosystem, making it a hub for innovation in causal inference. As a result, Python is becoming indispensable for implementing cutting-edge causal methodologies and fostering interdisciplinary research.

AI and Machine Learning Integration

The integration of AI and machine learning with causal inference is changing how causal relationships are analyzed. Deep learning frameworks such as TensorFlow and PyTorch are used to build neural models for causal tasks, with the aim of making predictions that hold up under interventions rather than only fitting observed data. Techniques such as causal representation learning and neural causal models are being explored to handle complex, non-linear relationships. Additionally, automated causal discovery using AI can speed up the identification of candidate causal structures from data. Python’s extensive ecosystem supports these advancements, making it a central platform for merging machine learning with causal methodologies. This integration is particularly valuable in scenarios with large datasets, where flexible models can scale causal analysis. As AI evolves, its combination with causal inference is expected to yield new insights across diverse domains.

The Role of Causal Inference in Modern Data Science

Causal inference plays a pivotal role in modern data science by enabling the understanding of causal relationships rather than mere correlations. It addresses critical questions such as “what if” scenarios, making it essential for decision-making. Python, with libraries like DoWhy, CausalML, and CausalGraph, has become a cornerstone for implementing causal analysis. These tools provide robust methods for identifying confounders, estimating treatment effects, and validating causal assumptions. In industries like healthcare, tech, and social sciences, causal inference aids in evaluating policies, predicting outcomes, and optimizing interventions. Its ability to uncover actionable insights makes it indispensable in data-driven environments, driving informed strategies and fostering innovation.
